Design Problem

Develop a user experience that helps people with visual impairments explore recipes and cook from them.

Discovery

Who is the user? (User Demographics)

1. To be considered legally blind, a person's best-corrected vision in the better eye must be 20/200 or worse.

2. About 7 million adults are visually impaired: 3.79 million women and 3.05 million men.

3. According to the Pew Research Center [2], 52% of US adults own a smartphone, and 50% of US adults with a household income below $30K own a computer.

4. Data from the WebAIM Screen Reader User Survey [1] shows that 72% of respondents owned a mobile device and 78% owned a desktop computer.
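The female/male breakdown in item 2 can be checked directly. The two subgroups add up to 6.84 million (the "about 7 million" figure is rounded), which gives these shares:

$$
\frac{3.79}{3.79 + 3.05} = \frac{3.79}{6.84} \approx 0.55 \ (\text{female}), \qquad \frac{3.05}{6.84} \approx 0.45 \ (\text{male})
$$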

What does this mean? (Inferences)

1. Visual impairment affects women and men in comparable numbers (roughly 55% women and 45% men).

2. Users are about as likely to own a smartphone or other mobile device as they are to own a computer.

3. People with visual impairments have difficulty reading small text, and many of them are already familiar with screen reader technology.

How will the user use this technology? (Context)

1. People will use this technology at home: in the kitchen while cooking, and elsewhere in the house while exploring recipes.

2. Users will need to refer to the recipe while cooking. Because their hands may be dirty, the technology should be hands-free.

What don't we know yet? (Open Questions)

1. How often do the users cook?

2. How do people with severe visual impairments buy cooking ingredients?

3. What kinds of dishes do the users tend to make? Are they complex or relatively simple?

4. Do the users have Internet access? If so, can they afford to stream video or video-chat with another person?

5. Do the users cook alone or with help from other people (sighted or visually impaired)?

These questions matter, and answering them would greatly improve our understanding of user stories. Given the project's short timeline, however, I had to work from what I already knew (mostly assumptions) and from information I could find on the Internet.

Key features required

The technology should be:

- portable (a smartphone app seems like a good fit).

- hands-free, so the user can interact with it while cooking.

Define

User Goals

1. Use the technology to find a recipe for a dish.

2. Navigate the recipe while cooking and find out how much of each ingredient is needed.
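To make the second goal concrete, here is a minimal sketch of the data a recipe assistant might keep so it can read steps aloud one at a time and answer "how much?" questions. It is not how the prototype works (the prototype was built in Origami Studio); `Ingredient`, `Recipe`, and `RecipeNavigator` are hypothetical names, and storing quantities as readable text such as "2 cups" is an assumption made so they can be spoken back unchanged.

```python
from dataclasses import dataclass


@dataclass
class Ingredient:
    name: str
    quantity: str  # assumption: stored as readable text, e.g. "2 cups", so it can be spoken as-is


@dataclass
class Recipe:
    title: str
    ingredients: list[Ingredient]
    steps: list[str]


class RecipeNavigator:
    """Hypothetical helper that tracks which step the cook is on,
    so steps can be read aloud one at a time."""

    def __init__(self, recipe: Recipe) -> None:
        self.recipe = recipe
        self.index = 0

    def current(self) -> str:
        total = len(self.recipe.steps)
        return f"Step {self.index + 1} of {total}: {self.recipe.steps[self.index]}"

    def next(self) -> str:
        # Stay on the last step rather than running past the end of the recipe.
        if self.index < len(self.recipe.steps) - 1:
            self.index += 1
        return self.current()

    def previous(self) -> str:
        # Stay on the first step rather than going before the start.
        if self.index > 0:
            self.index -= 1
        return self.current()

    def quantity_of(self, name: str) -> str:
        # Case-insensitive match, since speech-to-text capitalization varies.
        wanted = name.strip().lower()
        for item in self.recipe.ingredients:
            if item.name.lower() == wanted:
                return f"You need {item.quantity} of {item.name}."
        return f"{name.strip()} is not in this recipe."


if __name__ == "__main__":
    pancakes = Recipe(
        title="Pancakes",
        ingredients=[Ingredient("flour", "1 cup"), Ingredient("milk", "1 cup")],
        steps=["Whisk the flour and milk.", "Heat a greased pan.", "Cook until golden."],
    )
    nav = RecipeNavigator(pancakes)
    print(nav.current())  # Step 1 of 3: Whisk the flour and milk.
    print(nav.next())  # Step 2 of 3: Heat a greased pan.
    print(nav.quantity_of("Milk"))  # You need 1 cup of milk.
```

Clamping at the first and last step is a deliberate choice here: a cook who says "next" one time too many hears the final step again instead of an error.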

Business Goals

Make the app very easy to use for first-time users.

Ideation

Assuming the user is blind, my first idea was a wall-mounted device with an AI and a 3D camera that could understand the user's environment (i.e., the kitchen) and assist them while cooking. The AI could identify ingredients for the user, set automatic timers for cooking, and essentially act as a personal assistant in the kitchen.

Another idea was to offer the same functionality on a drone. The drone could also serve other purposes, such as identifying ingredients while the user is shopping for them, or helping them navigate while walking on the pavement.

Wall-mounted device with an AI and a 3D camera

Both ideas, however, were overkill for the problem I was trying to solve. In the end, I settled on a speech-based smartphone interface that the user could converse with.

My first thought was to build on an existing platform, since users might already be familiar with it, so I considered using Siri as the speech assistant. After further experimentation, however, I found Siri unreliable: it did not always recognize speech input correctly. And because visually impaired people have varying degrees of impairment, the option to enter commands as text would also be useful. With all this in mind, I decided to use Facebook's chat platform and give users the ability to both speak commands and type them using the on-screen keyboard.

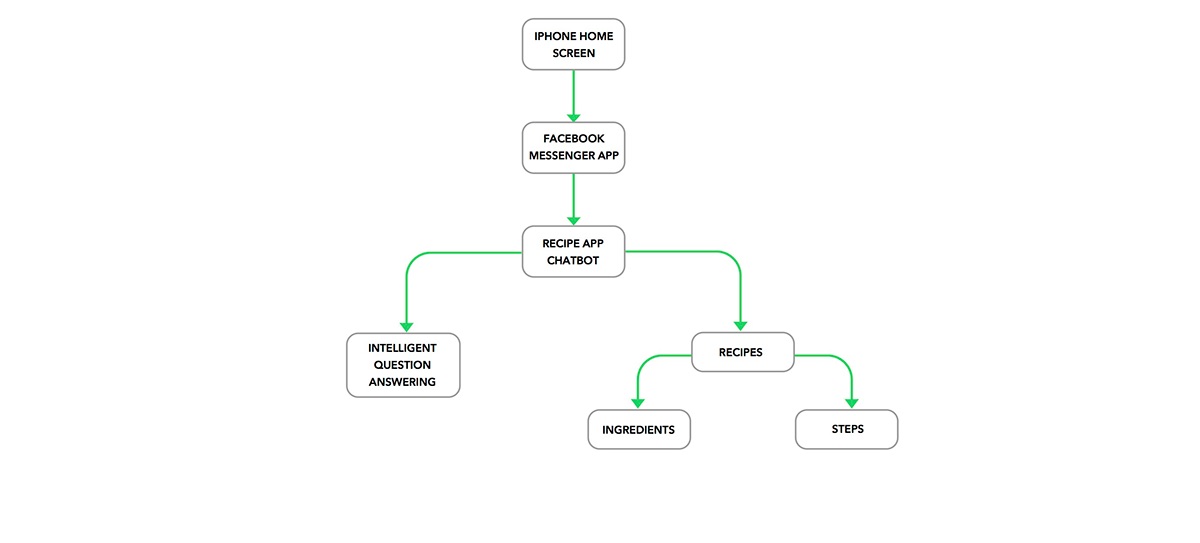
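Because both input modes described above end up as text (a speech transcript or typed characters), one plausible design sends both through a single command matcher, so the bot behaves the same however the user communicates. The sketch below assumes a small set of hypothetical intents (`next_step`, `previous_step`, `repeat`, `quantity`) and uses plain keyword matching as a stand-in; it does not use any Facebook or Siri API, and a production bot would more likely rely on a trained language-understanding service.

```python
import re

# Hypothetical intents and the phrases that trigger them (an assumption for
# illustration; keyword lists stand in for a real language-understanding model).
INTENT_KEYWORDS = {
    "next_step": ("next", "continue", "go on"),
    "previous_step": ("back", "previous"),
    "repeat": ("repeat", "again", "say that"),
    "quantity": ("how much", "how many", "quantity"),
}


def normalize(text: str) -> str:
    """Lower-case the input and replace punctuation with spaces, so typed
    commands look like speech transcripts, which often lack punctuation."""
    text = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return " ".join(text.split())


def match_intent(text: str) -> str:
    """Return the first intent whose keyword appears as a whole phrase,
    or "unknown" if nothing matches."""
    padded = f" {normalize(text)} "  # padding lets us match whole words only
    for intent, keywords in INTENT_KEYWORDS.items():
        for keyword in keywords:
            if f" {keyword} " in padded:
                return intent
    return "unknown"
```

For example, `match_intent("How much flour do I need?")` and a spoken "how much flour do i need" both resolve to `"quantity"`, which is the point of normalizing before matching.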

Information Architecture

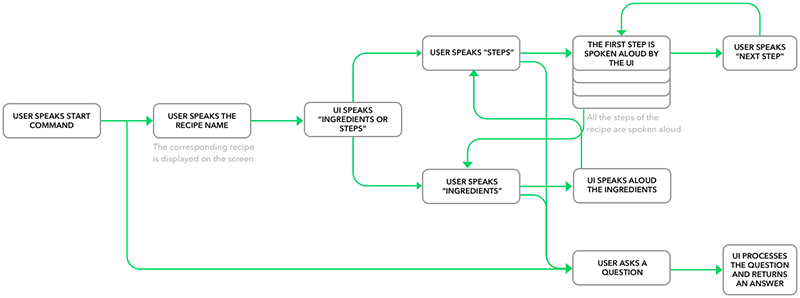

User Flow

Wireframing

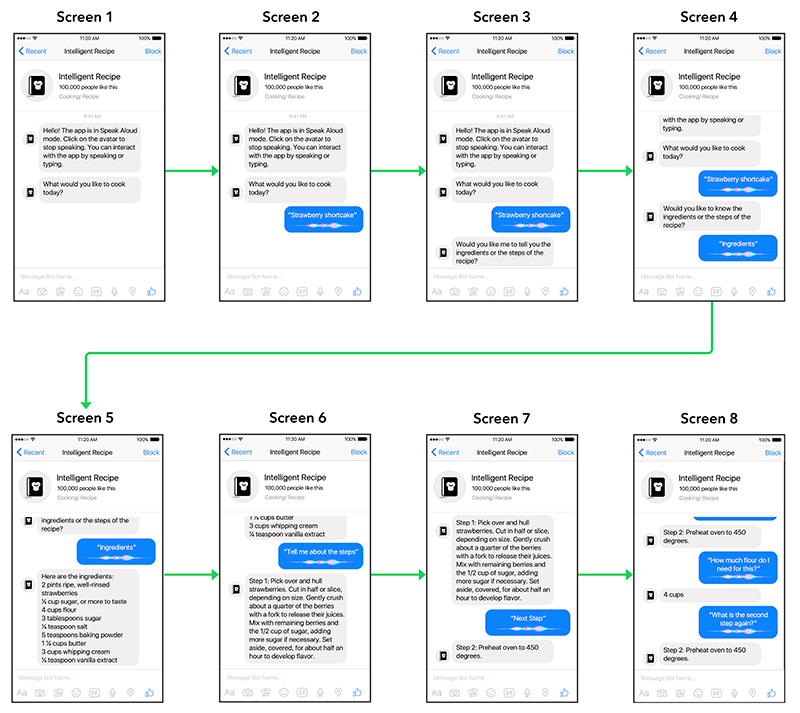

Wireframing a voice-enabled chatbot is hard because there are no buttons to press to move to the next screen; in fact, there are no screens at all. Instead, the wireframe shows one possible progression of the conversation between the app and the user.
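Although a chatbot has no screens, its conversation can still be mapped as a small state machine: each state is a point in the conversation, and the user's reply decides which state comes next. The sketch below encodes one possible progression under stated assumptions: the state names, the one-recipe catalogue, and the exact "next"/"repeat" commands are hypothetical and are not taken from the prototype.

```python
from enum import Enum, auto


class State(Enum):
    GREETING = auto()
    CHOOSING_RECIPE = auto()
    COOKING = auto()
    DONE = auto()


# Hypothetical one-item catalogue; a real bot would query a recipe source.
RECIPES = {
    "omelette": ["Beat two eggs.", "Pour into a hot pan.", "Fold and serve."],
}


class Conversation:
    """One possible conversation flow: states play the role that screens
    play in a visual app, and user replies are the transitions."""

    def __init__(self) -> None:
        self.state = State.GREETING
        self.steps: list[str] = []
        self.step = 0

    def _say_step(self) -> str:
        return f"Step {self.step + 1}: {self.steps[self.step]}"

    def reply(self, user_text: str) -> str:
        text = user_text.strip().lower()
        if self.state is State.GREETING:
            self.state = State.CHOOSING_RECIPE
            return "Hi! What would you like to cook today?"
        if self.state is State.CHOOSING_RECIPE:
            if text not in RECIPES:
                # Stay in the same state so the user can try again.
                return "Sorry, I don't have a recipe for that yet. Try another dish."
            self.steps, self.step = RECIPES[text], 0
            self.state = State.COOKING
            return f"Let's make {text}. {self._say_step()} Say 'next' when you are ready."
        if self.state is State.COOKING:
            if text == "repeat":
                return self._say_step()
            if text != "next":
                return "Say 'next' to continue or 'repeat' to hear the step again."
            self.step += 1
            if self.step >= len(self.steps):
                self.state = State.DONE
                return "That was the last step. Enjoy your meal!"
            return self._say_step()
        return "You have finished this recipe."
```

Writing the flow this way makes each branch of the conversation explicit, which is roughly what a wireframe does for screen-based apps.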

Interactive Prototype

I used Facebook's Origami Studio to create the interactive prototype. I really like its visual programming paradigm and have used it extensively in my other projects. For the prototype's visual layout, I used InVision's Chat UI Kit.

References

1. WebAIM, "Screen Reader User Survey #5," http://webaim.org/projects/screenreadersurvey5/

2. Pew Research Center, "U.S. Smartphone Use in 2015," http://www.pewinternet.org/2015/04/01/us-smartphone-use-in-2015/