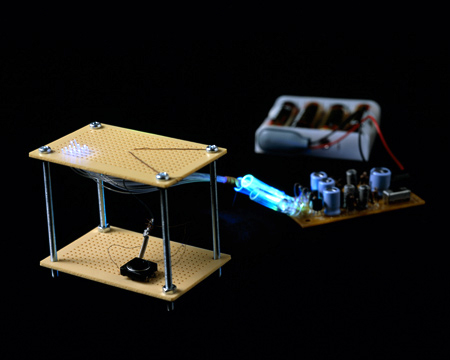

Lumen (photograph by Makoto Fujii, courtesy of AXIS Magazine)

Motivation

What would happen if the physical objects around us could transform their shapes? What kinds of interactions and applications would become possible with devices that dynamically deform and change their physical appearance in response to user actions?

In the Lumen project, we are creating and investigating an interactive device that can dynamically change its shape to communicate information to its users.

Lumen overview

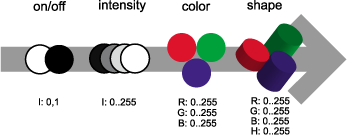

Conceptually, we are investigating interfaces that can be generalized as an extension of traditional 2D bitmapped RGB displays in which each pixel has an additional attribute: height. We call this interaction design approach an RGBH display, where RGB are the color components and H is the height of the pixel. It can be viewed as the next step in the evolution of the pixel.
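The RGBH idea can be made concrete as a data structure: an ordinary RGB frame buffer with one extra channel per pixel. The sketch below is illustrative only; the class names `RGBHPixel` and `RGBHFrame` and the normalised 0.0 to 1.0 height range are assumptions, not Lumen's actual internal format.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RGBHPixel:
    """One RGBH pixel: color components plus a physical height (hypothetical layout)."""
    r: int = 0      # red, 0-255
    g: int = 0      # green, 0-255
    b: int = 0      # blue, 0-255
    h: float = 0.0  # assumed normalised height: 0.0 = fully lowered, 1.0 = fully raised


class RGBHFrame:
    """A width x height grid of RGBH pixels (a sketch, not Lumen's own format)."""

    def __init__(self, width: int, height: int) -> None:
        self.width = width
        self.height = height
        # Row-major storage: pixels[y][x]
        self.pixels = [[RGBHPixel() for _ in range(width)] for _ in range(height)]

    def set(self, x: int, y: int, r: int, g: int, b: int, h: float) -> None:
        # Clamp the height into the normalised range the actuators are assumed to reach.
        self.pixels[y][x] = RGBHPixel(r, g, b, min(max(h, 0.0), 1.0))

    def color_plane(self) -> list[list[tuple[int, int, int]]]:
        """The ordinary 2D RGB image, as a conventional display would show it."""
        return [[(p.r, p.g, p.b) for p in row] for row in self.pixels]

    def height_plane(self) -> list[list[float]]:
        """The extra H channel: one height per pixel."""
        return [[p.h for p in row] for row in self.pixels]


# A 13 x 13 frame, matching the resolution of the Lumen device.
frame = RGBHFrame(13, 13)
frame.set(6, 6, 0, 0, 255, 1.5)  # blue center pixel; height 1.5 is clamped to 1.0
```

Keeping color and height in one pixel record mirrors the RGBH framing: a conventional display would read only `color_plane()`, while a shape display additionally drives its actuators from `height_plane()`.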

Lumen is an implementation of such an RGBH display: a low-resolution (13 by 13 pixel) bitmap display in which each pixel can also physically move up and down. The device can therefore display both two-dimensional graphical images and moving, dynamic 3D physical shapes, which can be viewed or touched and felt with the hands. A two-dimensional position sensor (SmartSkin), invisible to the user, is built into the Lumen surface and makes the device interactive: users can directly manipulate and modify shapes with their hands. Furthermore, by connecting several devices over a network, we can establish a remote haptic communication link between users.
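A remote haptic link between devices needs some way to send a shape from one display to another. The sketch below shows one possible message format: quantise the 13 by 13 height grid to one byte per pixel and rebuild it on the receiving side. The packet layout and the names `encode_heights` and `decode_heights` are hypothetical; the text does not describe Lumen's network protocol, and the transport (sockets, framing) is omitted.

```python
from __future__ import annotations

SIZE = 13  # Lumen's 13 x 13 grid, from the text


def encode_heights(heights: list[list[float]]) -> bytes:
    """Quantise a SIZE x SIZE grid of heights in [0, 1] to one byte per pixel (hypothetical format)."""
    if len(heights) != SIZE or any(len(row) != SIZE for row in heights):
        raise ValueError("expected a 13 x 13 height grid")
    out = bytearray()
    for row in heights:
        for h in row:
            clamped = min(max(h, 0.0), 1.0)
            out.append(round(clamped * 255))  # 0..255, row-major order
    return bytes(out)


def decode_heights(packet: bytes) -> list[list[float]]:
    """Inverse of encode_heights: rebuild the grid on the receiving device."""
    if len(packet) != SIZE * SIZE:
        raise ValueError("expected %d bytes, got %d" % (SIZE * SIZE, len(packet)))
    return [[packet[y * SIZE + x] / 255 for x in range(SIZE)] for y in range(SIZE)]


# Sender side: the user has pushed up one pixel on the local device.
local = [[0.0] * SIZE for _ in range(SIZE)]
local[3][4] = 1.0
packet = encode_heights(local)   # 169 bytes to send over the network
remote = decode_heights(packet)  # the shape the receiving device would render
```

One byte per pixel keeps each message small enough to resend every frame; the cost is a quantisation error of at most 1/510 of the full height range.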

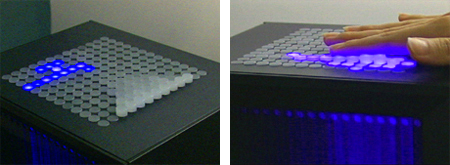

left: swimming fish with its fins "sticking up" from the water

right: users can create simple 3D traces with their hands


Interaction

Dynamic physical shapes add another interactive dimension to flat 2D images. Shape can increase the visual realism of 2D images by adding relief and real-time physical animation. For example, an animation of flowing water can be displayed by creating a physical 3D wave sweeping across the display surface. Similarly, the movement of a virtual character can be made more realistic by rendering parts of the character as shape: an image of a fish swimming across the display can be combined with the physical shape of its fins "sticking up" from the water. Alternatively, shape can be used to present fine surface irregularities and to create physical textures and patterns.
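A wave sweeping across the surface can be generated frame by frame as a height map. The sketch below uses a simple travelling sine wave, $h(x, t) = 0.5 + 0.5\sin\big(2\pi (x - vt)/\lambda\big)$; the waveform, wavelength, speed and frame rate are assumptions chosen for illustration, not the animation Lumen actually uses.

```python
from __future__ import annotations

import math

SIZE = 13  # Lumen's grid resolution, from the text


def wave_frame(t: float, wavelength: float = 6.0, speed: float = 3.0,
               size: int = SIZE) -> list[list[float]]:
    """Heights in [0, 1] for a plane wave travelling in the +x direction.

    h(x, t) = 0.5 + 0.5 * sin(2 * pi * (x - speed * t) / wavelength)
    wavelength is in pixels, speed in pixels per second, t in seconds.
    """
    frame = []
    for _y in range(size):
        row = []
        for x in range(size):
            phase = 2 * math.pi * (x - speed * t) / wavelength
            row.append(0.5 + 0.5 * math.sin(phase))
        frame.append(row)
    return frame


# One second of animation at an assumed 30 frames per second.
frames = [wave_frame(n / 30) for n in range(30)]
```

Because every row is identical, the crest reads as a straight wavefront moving across the display; varying the phase with `y` as well would give diagonal or circular ripples.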

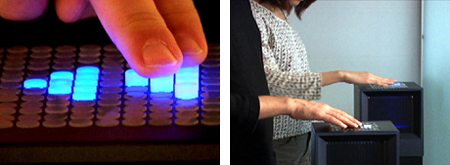

Lumen is also an inherently haptic display device: it allows users to feel the shapes of virtual images through touch. Indeed, an image can have a "real" 3D physical shape that the user can feel much as they would a real object. Furthermore, users can physically create and manipulate virtual images using the sensor built into the Lumen surface: for example, a physical wave can be drawn by moving a finger across the surface of Lumen. In another example, physical interface elements such as buttons can be dynamically created and "pressed" by users. By connecting two devices over a network, we can let people feel and touch each other remotely, establishing a remote haptic link between them.
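A dynamically created button combines both directions of the device: the display raises a block of pixels, and the touch sensor reports when a finger presses it. The sketch below assumes the sensor output is available as a per-pixel contact strength in [0, 1]; the `touch` grid, the 0.8 threshold, the `ShapeButton` class, and the choice to lower the button while pressed are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ShapeButton:
    """A rectangular block of pixels raised to act as a physical button (hypothetical)."""
    x: int
    y: int
    cols: int
    rows: int
    raised: float = 1.0   # height when idle (normalised 0..1)
    pressed: bool = False

    def cells(self):
        """Yield the (x, y) coordinates covered by the button."""
        for cy in range(self.y, self.y + self.rows):
            for cx in range(self.x, self.x + self.cols):
                yield cx, cy


def update_button(button, heights, touch, threshold=0.8):
    """Update one button from a touch map and write its shape into heights.

    touch[y][x] is an assumed per-pixel contact strength in [0, 1]
    (1.0 = finger in contact), standing in for the sensor output.
    """
    button.pressed = any(touch[cy][cx] >= threshold for cx, cy in button.cells())
    # Assumed behaviour: the button sinks while pressed and rises again when released.
    level = 0.0 if button.pressed else button.raised
    for cx, cy in button.cells():
        heights[cy][cx] = level
    return button.pressed


SIZE = 13
heights = [[0.0] * SIZE for _ in range(SIZE)]
touch = [[0.0] * SIZE for _ in range(SIZE)]
button = ShapeButton(5, 5, 3, 3)
update_button(button, heights, touch)  # idle: the 3 x 3 block is raised
touch[6][6] = 1.0                      # a finger lands on the button
update_button(button, heights, touch)  # now pressed: the block is lowered
```

Sinking the pressed button gives the finger physical feedback that the press registered, which a flat touch screen cannot provide.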

Finally, shape displays create new and beautiful aesthetics through 3D physical motion and images.

left: an animation of flowing water is created with physical shape

right: users can both feel images with hands and see them visually


left: dynamic 3D buttons can be created and "pressed"

right: haptic communication link between remote users can be established


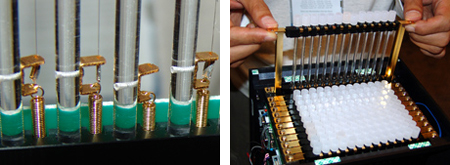

Construction

To create an image we are using arrays of light guides that direct light from the LEDs mounted on the bottom of Lumen. The tops of light guides are covered with matte cups that diffuse light and produce even illumination of each pixel.

Each pixel is actuated by thin shape-memory alloy (SMA) wires attached both to its light guide and to the frame of the device. When electrical current is applied, the SMA wires heat up and contract, pushing the light guides up; when they cool, they return to their original length. To provide interaction, a custom two-dimensional capacitive sensor (SmartSkin) is integrated into the Lumen surface. The sensor computes the shape of the touch area and the distance from the hand to the device surface, and this data is used to update the state of the Lumen device.
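Because an SMA wire only contracts while heated and relaxes as it cools, driving a pixel toward a target height is naturally an on/off decision per wire. The sketch below uses a bang-bang controller with a deadband (hysteresis) to avoid rapid switching near the target. It assumes an estimate of each pixel's current height is available, which the text does not state, and the `sma_drive` function and `deadband` value are hypothetical; a practical driver might instead modulate the current, for example with PWM.

```python
def sma_drive(current, target, previous, deadband=0.05):
    """Decide, per pixel, whether to pass current through its SMA wire.

    current, target: grids of heights in [0, 1]; previous: last on/off grid.
    True  -> heat the wire: it contracts and pushes the light guide up.
    False -> let it cool: it relaxes back to its original length.
    """
    drive = []
    for y, row in enumerate(target):
        out_row = []
        for x, goal in enumerate(row):
            error = goal - current[y][x]
            if error > deadband:
                on = True              # too low: heat to raise the pixel
            elif error < -deadband:
                on = False             # too high: cool to let it drop
            else:
                on = previous[y][x]    # inside the band: hold state to avoid chatter
            out_row.append(on)
        drive.append(out_row)
    return drive


# One row of three pixels: needs raising, needs lowering, already close to target.
decision = sma_drive([[0.0, 1.0, 0.50]], [[1.0, 0.0, 0.52]], [[False, True, True]])
# decision == [[True, False, True]]
```

The hold-previous-state branch matters for SMA in particular: the wire responds with thermal lag, so switching on every small error would waste power and cause the pixel to oscillate.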

left: light guides and shape memory alloys are used in construction of Lumen

right: Lumen is built of building blocks where each block is an array of pixels


Future

Lumen is an early, conceptual interactive prototype that allows us to imagine and propose future interfaces. With the development of new lightweight, energy-efficient and inexpensive actuator technologies, we anticipate the development of thin, fast and effective shape displays that can be used every day in public displays, electronic appliances, living environments and mobile devices. Perhaps the most prevalent robots of the future will not look like humans or animals, but will rather be dynamically reconfigurable surfaces that present information through images and dynamic physical shapes. Lumen is one of the first attempts to probe this future.

The first Lumen prototype; the main operation principles are the same as in the final version of the device (Photograph by Makoto Fujii, courtesy of AXIS Magazine).

Publications

Parkes, A., Poupyrev, I., Ishii, H. Designing Kinetic Interactions for Organic User Interfaces. Communications of the ACM 51(6). 2008: pp. 58-65.

Poupyrev, I., Nashida, T., Okabe, M. Actuation and Tangible User Interfaces: the Vaucanson Duck, Robots, and Shape Displays. Proceedings of TEI'07. 2007: ACM: pp. 205-212.

Poupyrev, I., Nashida, T., Maruyama, S., Rekimoto, J., Yamaji, Y. Lumen: Interactive Visual and Shape Display for Calm Computing. SIGGRAPH Conference Abstracts and Applications, Emerging Technologies. 2004: ACM.
